Pentaho Data Integration Repository Archive Is Produced

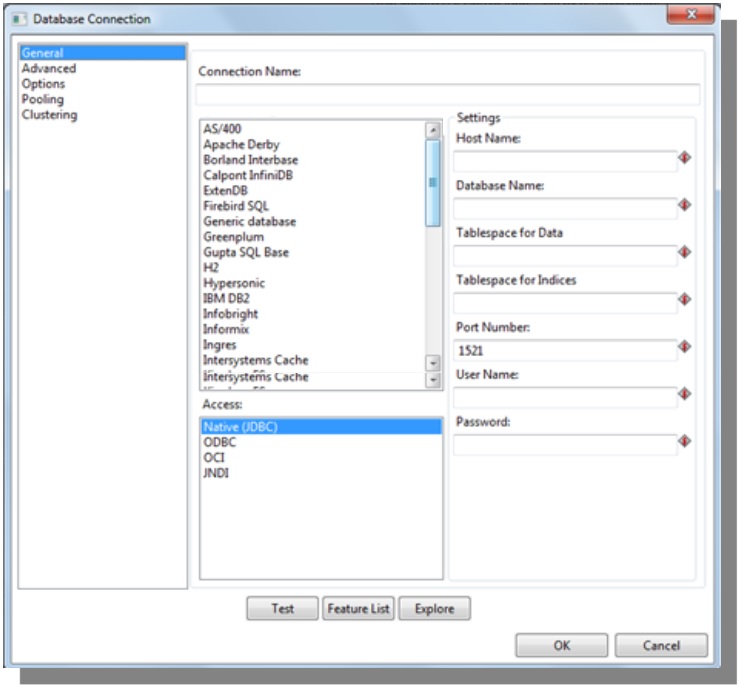

Pentaho Data Integration (PDI), also known as Kettle, provides the Extract, Transform, and Load (ETL) capabilities that facilitate the process of capturing, cleansing, and storing data using a uniform and consistent format that is accessible and relevant to end users and IoT technologies. This page describes a Docker image for PDI Community Edition.

The project is organised into the following modules:

- assemblies: Project distribution archive is produced under this module
- core: Core implementation
- dbdialog: Database dialog
- ui: User interface
- engine: PDI engine
- engine-ext: PDI engine extensions
- plugins: PDI core plugins
- integration: Integration tests

Pentaho repositories can be used in PDI. A central repository is used to control the version management of the objects.

The Carte configuration is controlled by the following environment variables:

- CARTE_USER: The username for this node (default: cluster)
- CARTE_PORT: The port to listen to (default: 8080)
- CARTE_NETWORK_INTERFACE: The network interface to bind to (default: eth0)
- CARTE_IS_MASTER: Whether this is a master node (default: Y)
- CARTE_INCLUDE_MASTERS: Whether to include a masters section in the Carte configuration (default: N)

If CARTE_INCLUDE_MASTERS is 'Y', then these additional environment variables apply:

- CARTE_REPORT_TO_MASTERS: Whether to notify the defined master node that this node exists (default: Y)
- CARTE_MASTER_NAME: The name of the master node (default: carte-master)
- CARTE_MASTER_HOSTNAME: The hostname of the master node (default: localhost)
- CARTE_MASTER_PORT: The port of the master node (default: 8080)
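As a rough sketch of how the master-related variables combine, the script below prints (but does not run) a `docker run` command for a slave node that reports to a master. The image name `abtpeople/pentaho-di` is taken from the build example on this page. The function name `carte_slave_command`, the hostname `carte-master.example.internal`, and the assumption that the image starts Carte by default are illustrative only.

```shell
#!/bin/sh
# Sketch: print a docker command for a Carte slave node.
# Assumption: the image's default command starts Carte.
IMAGE="${IMAGE:-abtpeople/pentaho-di}"

# carte_slave_command MASTER_HOST (hypothetical helper)
# Emits a single-line docker command; nothing is executed.
carte_slave_command() {
    master_host="$1"
    printf 'docker run -d -p 8080:8080'
    printf ' -e CARTE_IS_MASTER=N'            # this node is a slave
    printf ' -e CARTE_INCLUDE_MASTERS=Y'      # enables the CARTE_MASTER_* variables
    printf ' -e CARTE_REPORT_TO_MASTERS=Y'    # announce this node to the master
    printf ' -e CARTE_MASTER_HOSTNAME=%s' "$master_host"
    printf ' -e CARTE_MASTER_PORT=8080'
    printf ' %s\n' "$IMAGE"
}

# Hypothetical master hostname, for illustration only.
carte_slave_command carte-master.example.internal
```

Printing the command first makes it easy to review the variable set before starting a container with it.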

This image can be used as a base for packaging ETL scripts as Docker images, and running these images on a schedule (e.g. using cron or Chronos). To do this, create a Dockerfile with this image in the FROM command. Copy the transformation and job files into the image, along with any Kettle configurations required, and run Pan or Kitchen with the appropriate command line options.

For example, in order to run jobs and transformations from a file-based repository, the repository location first needs to be set in the file $KETTLE_HOME/.kettle/repositories.xml. Note that the path base_directory must be defined in the context of the Docker image, not the host machine.
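The following sketch shows one way to generate a `repositories.xml` for a file-based repository. The element names reflect the file-repository format as I understand it, so compare them against a `repositories.xml` written by Spoon before relying on them. The repository path `/pentaho-di/repo` and the helper name `write_repositories_xml` are hypothetical; the repository name `my_pdi_repo` matches the run commands on this page.

```shell
#!/bin/sh
set -eu

# Hypothetical values for illustration; adjust to the image layout.
KETTLE_HOME="${KETTLE_HOME:-$(mktemp -d)}"
REPO_NAME="${REPO_NAME:-my_pdi_repo}"
# Path inside the Docker image, not on the host machine.
REPO_DIR="${REPO_DIR:-/pentaho-di/repo}"

# Writes a minimal file-repository definition under $KETTLE_HOME/.kettle.
write_repositories_xml() {
    mkdir -p "$KETTLE_HOME/.kettle"
    cat > "$KETTLE_HOME/.kettle/repositories.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<repositories>
  <repository>
    <id>KettleFileRepository</id>
    <name>$REPO_NAME</name>
    <description>File-based repository</description>
    <base_directory>$REPO_DIR</base_directory>
    <read_only>N</read_only>
    <hides_hidden_files>N</hides_hidden_files>
  </repository>
</repositories>
EOF
}

write_repositories_xml
echo "Wrote $KETTLE_HOME/.kettle/repositories.xml"
```

The value passed to `-rep` on the Pan or Kitchen command line has to match the `<name>` element written here.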

Pentaho Data Integration Repository Full Configuration And

With a Dockerfile based on `abtpeople/pentaho-di` that copies `.kettle/repositories.xml` to `$KETTLE_HOME/.kettle/repositories.xml`, build the image using `docker build -t pdi_myrepo` followed by the build context path. Once the Docker image has been built, we can run the firstjob job. The `--rm` option can be used to automatically remove the Docker container once the job is completed, since this is a batch command and not a long-running service.

The same image can also be used to run any arbitrary job or transformation in the repository:

- `docker run --rm pdi_myrepo kitchen.sh -rep=my_pdi_repo -dir=/ -job=secondjob`
- `docker run --rm pdi_myrepo pan -rep=my_pdi_repo -dir=/ -trans=subtrans`

See the Pan and Kitchen user documentation for their full command line reference.

Running Custom Scripts

This image allows for full configuration and customisation via custom scripts. To use custom scripts, name them with a .sh extension, and copy them to the /docker-entrypoint.d folder. For example, a script can be used to clone a Git repository containing the transformations and jobs to be run.
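A custom script for the /docker-entrypoint.d folder that clones a Git repository might look like the sketch below. The file name `10-clone-etl.sh`, the variables `ETL_GIT_URL` and `ETL_TARGET_DIR`, and the function `clone_etl_repo` are hypothetical names, not something the image defines, and the script assumes `git` is installed in the image.

```shell
#!/bin/sh
# Hypothetical file: /docker-entrypoint.d/10-clone-etl.sh
# ETL_GIT_URL and ETL_TARGET_DIR are assumed names, not defined by the image.

ETL_TARGET_DIR="${ETL_TARGET_DIR:-/tmp/etl-repo}"

clone_etl_repo() {
    # Nothing to do when no repository URL was supplied.
    if [ -z "${ETL_GIT_URL:-}" ]; then
        echo "ETL_GIT_URL not set; skipping clone"
        return 0
    fi
    # Reuse an existing checkout so container restarts stay idempotent.
    if [ -d "$ETL_TARGET_DIR/.git" ]; then
        echo "Repository already present in $ETL_TARGET_DIR"
        return 0
    fi
    # Shallow clone: only the latest revision is needed to run the jobs.
    git clone --depth 1 "$ETL_GIT_URL" "$ETL_TARGET_DIR"
}

clone_etl_repo
```

Passing the URL as an environment variable at `docker run` time keeps the image generic, so the same image can run ETL code from different repositories.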
